Note 04: Fixing a memory leak caused by an unreleased WebRTC toI420() buffer


This bug bothered me for several days before I finally fixed it. I clearly still have a lot to learn!

Leaking version: in `adb shell top`, %MEM climbs steadily and the app crashes at around 70.

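    decoder = WebRTCDecoder { frame ->
        // Handle the decoded video frame.
        // Leak: toI420() returns a buffer reference that the caller owns, and it is never released here.
        val videoFrame = VideoFrame(frame.buffer.toI420(),
            frame.rotation, frame.timestampNs)
        capturerObserver?.onFrameCaptured(videoFrame)
    }

Fixed version: %MEM stays stable at around 7.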

    decoder = WebRTCDecoder { frame ->
        // Create a new VideoFrame
        val i420Buffer = frame.buffer.toI420()
        try {
            val videoFrame = VideoFrame(i420Buffer, frame.rotation, frame.timestampNs)
            capturerObserver?.onFrameCaptured(videoFrame)
        } finally {
            // Release the I420 buffer. This is the key fix!
            i420Buffer?.release()
        }
    }

The camera on our company's hardware device is external, so the ways WebRTC provides for obtaining a VideoCapturer do not apply and a custom capturer is needed.

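The existing callback delivers a raw H264 stream. Each packet has to be fed to WebRTC's decoder, and the decoded frames passed to capturerObserver.onFrameCaptured(), so that the camera picture is both displayed locally and sent to the remote peer.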
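The decoder shown later dispatches on `packet.nalUnitType` and `packet.startCodeSize`, but the `H264Packet` type itself is not included in this post. As a rough illustration of how those two values can be derived from a raw Annex B packet, here is a minimal sketch. The function names `startCodeSize`, `nalUnitType` and `describe` are hypothetical helpers written for this example, not part of the original project or of WebRTC; the type numbers (1, 5, 7, 8) are the standard H.264 nal_unit_type values.

```kotlin
// Hypothetical helpers for illustration only; not taken from the original project.

/** Length of the Annex B start code at the head of [data] (3 or 4), or 0 if there is none. */
fun startCodeSize(data: ByteArray): Int = when {
    data.size >= 4 && data[0] == 0.toByte() && data[1] == 0.toByte() &&
        data[2] == 0.toByte() && data[3] == 1.toByte() -> 4
    data.size >= 3 && data[0] == 0.toByte() && data[1] == 0.toByte() &&
        data[2] == 1.toByte() -> 3
    else -> 0
}

/** nal_unit_type: the low 5 bits of the NAL header byte, or -1 if no header byte is present. */
fun nalUnitType(data: ByteArray): Int {
    val offset = startCodeSize(data)
    if (offset == 0 || offset >= data.size) return -1
    return data[offset].toInt() and 0x1F
}

/** Human-readable name for the NAL types the decoder cares about. */
fun describe(type: Int): String = when (type) {
    7 -> "SPS"
    8 -> "PPS"
    5 -> "IDR"
    1 -> "non-IDR slice"
    else -> "other"
}

fun main() {
    val sps = byteArrayOf(0, 0, 0, 1, 0x67, 0x42)          // 4-byte start code, header 0x67
    val idr = byteArrayOf(0, 0, 1, 0x65, 0x88.toByte())    // 3-byte start code, header 0x65
    println(describe(nalUnitType(sps))) // prints "SPS"
    println(describe(nalUnitType(idr))) // prints "IDR"
}
```

A real packet source may already expose these fields, in which case no parsing like this is needed.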

    import android.content.Context
    import org.webrtc.CapturerObserver
    import org.webrtc.SurfaceTextureHelper
    import org.webrtc.VideoCapturer
    import org.webrtc.VideoFrame

    class XCameraVideoCapturer : VideoCapturer {
        private var capturerObserver: CapturerObserver? = null
        private var decoder: WebRTCDecoder? = null

        /**
         * Called from outside. Create a VideoSource and pass in its observer:
         * val videoSource = peerConnectionFactory.createVideoSource(false)
         * videoCapturer.initialize(null, null, videoSource.capturerObserver)
         **/
        override fun initialize(
            surfaceTextureHelper: SurfaceTextureHelper?,
            context: Context?,
            observer: CapturerObserver
        ) {
            this.capturerObserver = observer
            initDecoder()
        }

        private fun initDecoder() {
            decoder = WebRTCDecoder { frame ->
                // Convert to I420 and build a new VideoFrame; otherwise the buffer type is wrong and nothing is displayed
                val i420Buffer = frame.buffer.toI420()
                try {
                    val videoFrame = VideoFrame(i420Buffer, frame.rotation, frame.timestampNs)
                    capturerObserver?.onFrameCaptured(videoFrame)
                } finally {
                    // Must be released, otherwise native memory runs out
                    i420Buffer?.release()
                }
            }
        }

        // Callback that receives the data packets
        override fun onXCameraH264Packet(packet: H264Packet) {
            // Decode the packet (decoder is nullable, so use a safe call)
            decoder?.feed(packet)
        }

        ...
    }

Custom decoder:

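    import android.os.Handler
    import android.os.HandlerThread
    import java.nio.ByteBuffer
    import org.webrtc.EncodedImage
    import org.webrtc.HardwareVideoDecoderFactory
    import org.webrtc.VideoCodecInfo
    import org.webrtc.VideoCodecStatus
    import org.webrtc.VideoDecoder
    import org.webrtc.VideoFrame

    class WebRTCDecoder(
        private val frameProcessor: (VideoFrame) -> Unit
    ) : VideoDecoder.Callback {
        // Holds a reference to the codec thread
        private var codecThread: HandlerThread? = null

        // Lazy initialization keeps the handler creation thread-safe
        private val codecHandler: Handler by lazy { createCodecHandler() }

        private var nativeDecoder: VideoDecoder? = null
        private val factory = HardwareVideoDecoderFactory(null)
        private val h264Info = VideoCodecInfo("H264", mapOf(
            "max-decode-buffers" to "10",
            "drop-frames" to "on",
            "low-latency" to "true"
        ), null)

        // NAL unit start code
        private val delimiter = byteArrayOf(0x00, 0x00, 0x00, 0x01)

        // Decoder state; written on the codec thread and read by feed(), hence @Volatile
        @Volatile
        private var isInitialized = false
        private var spsData: ByteArray? = null
        private var ppsData: ByteArray? = null

        init {
            initDecoder()
        }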

        fun setDecodeStatus(execute: Boolean) {
            isInitialized = execute
        }

        /**
         * Creates the Handler for the codec thread
         */
        private fun createCodecHandler(): Handler {
            val thread = HandlerThread("WebRTC-Codec-Thread").apply { start() }
            codecThread = thread
            return Handler(thread.looper)
        }

        /**
         * Initializes the decoder
         */
        private fun initDecoder() {
            nativeDecoder = factory.createDecoder(h264Info)
                ?: throw IllegalStateException("Failed to create webrtc H.264 decoder")
            runOnCodecThread {
                // Settings(numberOfCores, width, height)
                val settings = VideoDecoder.Settings(1, 1920, 1080)
                val codecStatus = nativeDecoder?.initDecode(settings, this)
                isInitialized = codecStatus == VideoCodecStatus.OK
                reInitDecoderIfError(codecStatus)
            }
        }

        /**
         * Decodes an H264Packet
         */
        fun feed(packet: H264Packet) {
            if (!isInitialized) return
            when (packet.nalUnitType) {
                // Cache the parameter sets; they are prepended to every key frame
                NalUnitType.SPS -> spsData = extractNalPayload(packet)
                NalUnitType.PPS -> ppsData = extractNalPayload(packet)
                NalUnitType.IDR -> decodeKeyFrame(packet)
                else -> {
                    // Drop delta frames until both SPS and PPS have been seen
                    if (spsData == null || ppsData == null) {
                        return
                    } else {
                        decodeRegularFrame(packet)
                    }
                }
            }
        }

        // Returns the NAL unit without its leading start code
        private fun extractNalPayload(packet: H264Packet): ByteArray {
            return packet.frame.copyOfRange(packet.startCodeSize, packet.frame.size)
        }

        private fun decodeKeyFrame(packet: H264Packet) {
            val sps = spsData ?: return
            val pps = ppsData ?: return
            // Build a complete key frame containing SPS, PPS and the IDR frame.
            // packet.frame still carries its own start code, so only two delimiters are added.
            val frameBuffer = ByteBuffer.allocateDirect(
                sps.size + pps.size + packet.frame.size + delimiter.size * 2)
            frameBuffer.apply {
                put(delimiter)
                put(sps)
                put(delimiter)
                put(pps)
                put(packet.frame)
                flip()
            }
            decodeFrame(frameBuffer, packet.presentationTimeUs, true)
        }

        private fun decodeRegularFrame(packet: H264Packet) {
            decodeFrame(packet.buffer, packet.presentationTimeUs, false)
        }

        private fun decodeFrame(buffer: ByteBuffer, ptsUs: Long, isKeyFrame: Boolean) {
            val frame = EncodedImage.builder()
                .setBuffer(buffer, null)
                .setCaptureTimeNs(ptsUs * 1000) // μs → ns
                .setFrameType(
                    if (isKeyFrame)
                        EncodedImage.FrameType.VideoFrameKey
                    else
                        EncodedImage.FrameType.VideoFrameDelta
                )
                .createEncodedImage()
            runOnCodecThread {
                // DecodeInfo expects the render time in milliseconds, so convert μs → ms
                val codecStatus = nativeDecoder?.decode(frame, VideoDecoder.DecodeInfo(false, ptsUs / 1000))
                reInitDecoderIfError(codecStatus)
            }
        }

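        /**
         * Re-creates the decoder after an error
         */
        private fun reInitDecoderIfError(codecStatus: VideoCodecStatus?) {
            when (codecStatus) {
                VideoCodecStatus.ERROR -> {
                    resetInitStatus()
                    // Release the failed decoder first so it is not leaked when a new one is created
                    nativeDecoder?.release()
                    nativeDecoder = null
                    initDecoder()
                }
                else -> {}
            }
        }

        /**
         * Resets the initialization state
         */
        private fun resetInitStatus() {
            isInitialized = false
            spsData = null
            ppsData = null
        }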

        /**
         * Releases the decoder resources
         */
        fun release() {
            runOnCodecThread {
                resetInitStatus()
                nativeDecoder?.release()
                nativeDecoder = null
                codecThread?.quitSafely()
                codecThread = null
            }
        }

        /**
         * Ensures the block runs on the codec thread
         */
        private fun runOnCodecThread(block: () -> Unit) {
            // Already on the codec thread: run directly
            if (Thread.currentThread() == codecHandler.looper.thread) {
                block()
                return
            }
            // Otherwise run asynchronously
            codecHandler.post(block)
        }

override fun onDecodedFrame(frame: VideoFrame, decodeTimeMs: Int, qp: Int?) {

frameProcessor(frame)

}

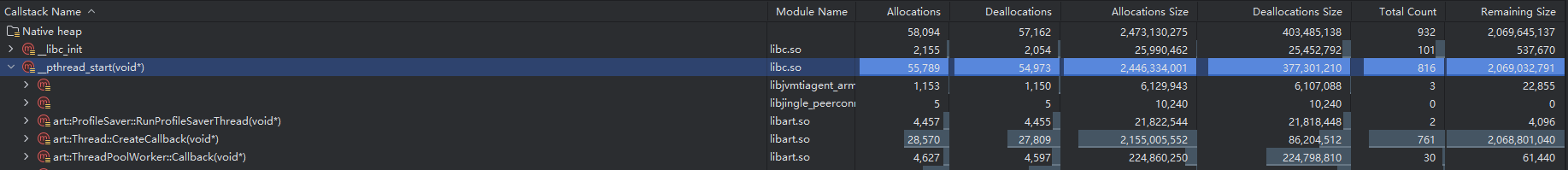

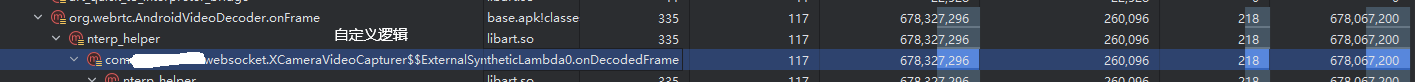

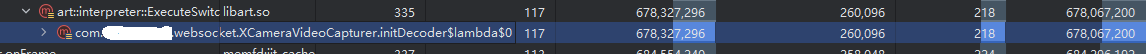

}找了很久以为是解码器哪里的缓冲区泄露了,结果是在回调里,听从deepseek的建议用profiler分析 Native内存情况完成了定位:

Find the heap with the largest Remaining size, then drill down level by level until you reach the logic you implemented yourself.

This confirms that the leak is in the lambda passed to WebRTCDecoder in XCameraVideoCapturer.initDecoder.
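Why a single missing release() is enough to leak every frame can be shown with a toy reference-counting model. The sketch below is not the real org.webrtc code: `CountedBuffer`, `deliverLeaky` and `deliverFixed` are hypothetical names, and the model assumes that toI420() hands the caller one extra reference which the caller must give back. That matches the retain/release contract of WebRTC buffers as far as I understand it.

```kotlin
// Toy model of a ref-counted buffer. It only mimics the retain/release contract;
// it is NOT the real org.webrtc implementation.
class CountedBuffer(private val onFreed: () -> Unit) {
    var refCount = 1
        private set

    fun retain() {
        check(refCount > 0) { "buffer already freed" }
        refCount++
    }

    fun release() {
        check(refCount > 0) { "released too many times" }
        refCount--
        if (refCount == 0) onFreed()
    }

    // Assumption: the conversion gives the caller one extra reference to release.
    fun toI420(): CountedBuffer {
        retain()
        return this
    }
}

// Mirrors the leaking callback: the extra reference is never given back.
fun deliverLeaky(frameBuffer: CountedBuffer) {
    frameBuffer.toI420()
}

// Mirrors the fixed callback: the extra reference is released in finally.
fun deliverFixed(frameBuffer: CountedBuffer) {
    val i420 = frameBuffer.toI420()
    try {
        // The frame would be handed to the consumer here.
    } finally {
        i420.release()
    }
}

fun main() {
    var freed = 0

    val leaky = CountedBuffer { freed++ }
    deliverLeaky(leaky)
    leaky.release() // the decoder drops its own reference after the callback
    println("leaky: refCount=${leaky.refCount}, freed=$freed") // prints "leaky: refCount=1, freed=0"

    val fixed = CountedBuffer { freed++ }
    deliverFixed(fixed)
    fixed.release()
    println("fixed: refCount=${fixed.refCount}, freed=$freed") // prints "fixed: refCount=0, freed=1"
}
```

In the leaky path the count never reaches zero, so the native memory behind each decoded frame stays allocated, which is consistent with the steadily rising %MEM seen in `adb shell top`.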
