Ontology Study Notes

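This note explores how ontologies and retrieval-augmented generation (RAG) work together with large language models (LLMs). Using a structured ontology as a precise knowledge source for RAG can significantly improve an LLM's response accuracy, reasoning ability, and domain expertise. Two approaches are compared: fine-tuning the LLM directly on the ontology, or using ON-RAG to pass ontology retrieval results to the LLM as input context. The latter effectively reduces hallucinations and makes answers easier to verify. Microsoft's open-source OG-RAG system implements this framework and offers a feasible way to inject structured knowledge into LLMs.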

LLM, Ontology, and RAG: The Basic Relationship

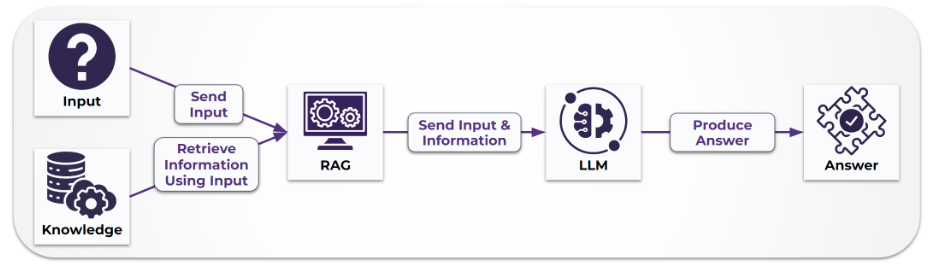

The ontology acts as the "knowledge source" for the RAG process: RAG retrieves relevant facts and relationships from the ontology and passes them to the LLM as context.

- LLM: The large language model is the final engine that generates the response.

- Ontology: An ontology provides a formal, structured representation of knowledge, defining entities and their relationships in a specific domain. Because facts are stored as explicit entities and typed relationships, this data is more precise than unstructured text documents.

- RAG: The RAG process acts as the bridge between the ontology and the LLM.

- When a user asks a question, the RAG system uses the query to search the ontology for relevant facts and relationships.

- The retrieved, structured information from the ontology is then passed to the LLM along with the original question.

- The LLM uses this retrieved context to generate a more accurate, specific, and well-grounded answer, reducing hallucinations.
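The retrieve-then-generate flow above can be sketched in Python. This is a minimal illustration under stated assumptions, not OG-RAG or any specific library: the ontology is a hypothetical in-memory list of (subject, predicate, object) triples, retrieval is naive case-insensitive matching of entity names against the question, and the call to an actual LLM is omitted. `Triple`, `retrieve`, and `build_prompt` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """One ontology fact: (subject, predicate, object)."""
    subject: str
    predicate: str
    obj: str

    def render(self) -> str:
        return f"({self.subject}, {self.predicate}, {self.obj})"


# Hypothetical toy ontology, for illustration only
ONTOLOGY = [
    Triple("Aspirin", "is_a", "NSAID"),
    Triple("Aspirin", "treats", "Headache"),
    Triple("NSAID", "may_cause", "StomachBleeding"),
    Triple("Ibuprofen", "is_a", "NSAID"),
]


def retrieve(question: str, ontology: list[Triple]) -> list[Triple]:
    """Return triples whose subject or object name appears in the question (naive string match)."""
    q = question.lower()
    return [t for t in ontology if t.subject.lower() in q or t.obj.lower() in q]


def build_prompt(question: str, facts: list[Triple]) -> str:
    """Put the retrieved facts in front of the question so the LLM answers from them."""
    context = "\n".join(f"- {t.render()}" for t in facts) or "- (no matching facts)"
    return (
        "Answer using only the facts below. If they are insufficient, say so.\n"
        f"Facts:\n{context}\n\nQuestion: {question}"
    )


question = "What does aspirin treat?"
facts = retrieve(question, ONTOLOGY)
prompt = build_prompt(question, facts)
print(prompt)  # this string would then be sent to the LLM (call omitted)
```

A real system would typically replace string matching with entity linking or embedding search, and might query a triple store with SPARQL instead of scanning a list.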

Benefits of using ontologies in RAG

- Improved accuracy: By using a structured ontology, the RAG system can retrieve precise, fact-based context, leading to more accurate LLM responses.

- Enhanced reasoning: Ontologies enable the LLM to make better-reasoned connections between different pieces of information, which is especially useful for complex queries.

- Increased transparency: The system can attribute its answers back to the specific facts and relationships in the ontology, making the source of information more verifiable.

- Domain-specific knowledge: Integrating an ontology allows the LLM to access and use detailed, specific knowledge from a particular domain that it was not originally trained on.
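The reasoning and transparency benefits can be illustrated together with a small multi-hop lookup. In this hypothetical sketch, each fact carries an ID, and a breadth-first search over subject-to-object edges links two entities that no single fact connects; the fact IDs along the path double as citations the answer can point back to. The toy facts and the `find_path` helper are assumptions made for illustration.

```python
from collections import deque
from typing import Optional

# Hypothetical toy facts keyed by fact ID: (subject, predicate, object)
FACTS: dict[str, tuple[str, str, str]] = {
    "f1": ("Aspirin", "is_a", "NSAID"),
    "f2": ("NSAID", "may_cause", "StomachBleeding"),
    "f3": ("Ibuprofen", "is_a", "NSAID"),
    "f4": ("Aspirin", "treats", "Headache"),
}


def find_path(start: str, goal: str, facts: dict[str, tuple[str, str, str]]) -> Optional[list[str]]:
    """Breadth-first search from start to goal; returns the fact IDs on a shortest path, or None."""
    # Adjacency list: subject -> [(fact_id, object), ...]
    adj: dict[str, list[tuple[str, str]]] = {}
    for fid, (subj, _pred, obj) in facts.items():
        adj.setdefault(subj, []).append((fid, obj))

    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for fid, nxt in adj.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [fid]))
    return None


path = find_path("Aspirin", "StomachBleeding", FACTS)
for fid in path:
    subj, pred, obj = FACTS[fid]
    print(f"[{fid}] {subj} {pred} {obj}")  # each hop is a citable fact
```

The search treats every relation as traversable; a production system would usually restrict which predicates may be chained.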

Reference: The Role of Ontologies with LLMs - Enterprise Knowledge

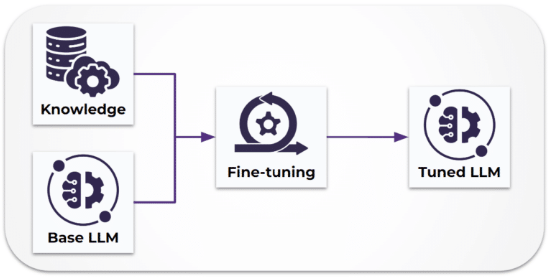

Fine-tuning the LLM directly on the ontology
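For the fine-tuning route, ontology triples must first be turned into training text. A minimal sketch, assuming one hand-written question template per relation and a generic `prompt`/`completion` JSONL layout; the required field names depend on the fine-tuning framework you use, and `TEMPLATES`, `triples_to_examples`, and `to_jsonl` are hypothetical.

```python
import json

# Hypothetical ontology triples: (subject, predicate, object)
TRIPLES = [
    ("Aspirin", "is_a", "NSAID"),
    ("Aspirin", "treats", "Headache"),
    ("NSAID", "may_cause", "StomachBleeding"),
]

# Hypothetical question templates, one per relation
TEMPLATES = {
    "is_a": "What kind of thing is {s}?",
    "treats": "What does {s} treat?",
}


def triples_to_examples(triples):
    """Turn each triple into a question/answer pair; relations without a template are skipped."""
    examples = []
    for s, p, o in triples:
        template = TEMPLATES.get(p)
        if template is None:
            continue
        examples.append({"prompt": template.format(s=s), "completion": o})
    return examples


def to_jsonl(examples) -> str:
    """Serialize one JSON object per line, a common layout for fine-tuning data."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


examples = triples_to_examples(TRIPLES)
print(to_jsonl(examples))
```

One trade-off is that knowledge learned this way lives in the model weights, so changing the ontology means retraining, whereas the RAG route picks up ontology edits at query time.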

Using ON-RAG to provide input to the LLM

ON-RAG: OG-RAG: Ontology-Grounded Retrieval-Augmented Generation For Large Language Models
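The OG-RAG paper describes grouping ontology-grounded facts into hyperedges of a hypergraph and retrieving a minimal set of hyperedges that builds a grounded context for the query. The sketch below approximates that selection step with the textbook greedy set-cover heuristic. This is our assumption about how to illustrate it, not code from microsoft/ograg2: the hyperedges are hypothetical, and the query-relevant nodes are hand-picked rather than found by similarity search.

```python
# Hypothetical hyperedges: each groups facts (nodes) that belong together
HYPEREDGES: dict[str, set[str]] = {
    "h1": {"crop:Wheat", "sowing_month:October", "region:Punjab"},
    "h2": {"crop:Wheat", "fertilizer:Urea"},
    "h3": {"crop:Rice", "sowing_month:June"},
    "h4": {"fertilizer:Urea", "dose:120kg/ha"},
}


def greedy_cover(relevant: set[str], hyperedges: dict[str, set[str]]) -> list[str]:
    """Repeatedly pick the hyperedge covering the most still-uncovered relevant nodes."""
    uncovered = set(relevant)
    chosen: list[str] = []
    while uncovered and hyperedges:
        # max() returns the first hyperedge on ties, so the result is deterministic
        best = max(hyperedges, key=lambda h: len(hyperedges[h] & uncovered))
        gain = hyperedges[best] & uncovered
        if not gain:
            break  # remaining nodes appear in no hyperedge
        chosen.append(best)
        uncovered -= gain
    return chosen


relevant = {"crop:Wheat", "fertilizer:Urea", "dose:120kg/ha"}
selected = greedy_cover(relevant, HYPEREDGES)
print(selected)  # ['h2', 'h4']

# The union of the selected hyperedges becomes the LLM's context
context = sorted(set().union(*(HYPEREDGES[h] for h in selected)))
print(context)
```

Greedy set cover does not guarantee the smallest possible set, but it is a simple, well-known approximation for this kind of "cover everything relevant with few groups" selection.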

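References:

1. Paper: OG-RAG: Ontology-Grounded Retrieval-Augmented Generation For Large Language Models

2. Microsoft implementation: GitHub - microsoft/ograg2: OGRAG - Release Version

3. AsiaInfo Technologies article: A Survey of Research on the Synergistic Empowerment of Ontologies and LLMs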
