Deploying Deepseek on Kubernetes (Intranet Environment)


Deploying the Kubernetes cluster

Omitted.

Starting the Ollama Operator

Official site: https://ollama-operator.ayaka.io/pages/en/guide/getting-started/

Downloading the install manifest from GitHub requires unrestricted internet access (e.g., through a proxy):

https://raw.githubusercontent.com/nekomeowww/ollama-operator/v0.10.1/dist/install.yaml

The two images the Ollama Operator depends on must also be downloaded with unrestricted internet access:

ghcr.io/nekomeowww/ollama-operator:0.10.1

gcr.io/kubebuilder/kube-rbac-proxy:v0.15.0

Install the operator:

```shell
kubectl apply --server-side=true -f install.yaml
```

Wait for the operator to be ready:

```shell
kubectl wait \
  -n ollama-operator-system \
  --for=jsonpath='{.status.readyReplicas}'=1 \
  deployment/ollama-operator-controller-manager
```

The following output indicates that the operator is ready:

```
deployment.apps/ollama-operator-controller-manager condition met
```

```shell
kubectl get all -n ollama-operator-system
```

```
NAME                                                     READY   STATUS    RESTARTS   AGE
pod/ollama-operator-controller-manager-c896f8956-97tpv   2/2     Running   2          3d1h

NAME                                                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/ollama-operator-controller-manager-metrics-service   ClusterIP   10.254.135.104   <none>        8443/TCP   3d1h

NAME                                                 READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ollama-operator-controller-manager   1/1     1            1           3d1h

NAME                                                           DESIRED   CURRENT   READY   AGE
replicaset.apps/ollama-operator-controller-manager-c896f8956   1         1         1       3d1h
```
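The `kubectl wait` condition above only checks that `.status.readyReplicas` of the Deployment equals 1. As an illustration of what that condition means, here is a minimal Python sketch of the same check, assuming `kubectl` is on the PATH and configured for the cluster; `is_ready` and `wait_for_deployment` are hypothetical helper names, not part of the operator.

```python
import json
import subprocess
import time


def is_ready(deployment: dict, expected: int = 1) -> bool:
    """Same test as --for=jsonpath='{.status.readyReplicas}'=<expected>."""
    # status.readyReplicas is omitted while no replica is ready, so default to 0
    return deployment.get("status", {}).get("readyReplicas", 0) == expected


def wait_for_deployment(name: str, namespace: str, expected: int = 1,
                        timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll `kubectl get deployment -o json` until ready or until the timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        result = subprocess.run(
            ["kubectl", "get", "deployment", name, "-n", namespace, "-o", "json"],
            capture_output=True, text=True, check=True,
        )
        if is_ready(json.loads(result.stdout), expected):
            return True
        time.sleep(interval_s)
    return False
```

For example, `wait_for_deployment("ollama-operator-controller-manager", "ollama-operator-system")` corresponds to the `kubectl wait` command above; in practice `kubectl wait` is the simpler option, and the sketch only spells out the condition.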

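Deploying the LLM on K8s

On K8s, the model can be deployed directly with the Ollama Operator's `Model` custom resource (CRD).

Creating the required storage on Kubernetes

Create the StorageClass: the first time a model is deployed, a store service (`ollama-models-store`) is created first to hold Ollama's model files. The store service uses a PV, which must be prepared in advance.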

```shell
kubectl apply -f storage-class.yaml
kubectl get sc
```

```
NAME         PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-path   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   true                   3d
```

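storage-class.yaml:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: kubernetes.io/no-provisioner  # static provisioning: PVs are created manually
volumeBindingMode: WaitForFirstConsumer    # bind the volume only after a Pod using it is scheduled
reclaimPolicy: Delete                      # reclaim policy (Delete or Retain)
allowVolumeExpansion: true                 # allow volume expansion
```

Create the PV: the PVC created for ollama-models-store requests 100Gi by default, and I found no place to change this, so the PV must provide at least 100Gi (`spec.capacity.storage: 100Gi` is used here). If the PV does not match, the PVC created by ollama-models-store cannot find a PV to bind to and stays Pending.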

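PV access modes:

| Access mode | Definition | Characteristics |
|---|---|---|
| ReadWriteOnce (RWO) | The volume can be mounted read-write by a single node. | Suited to scenarios that need exclusive access; commonly used by single-replica applications; supported by most storage systems |
| ReadOnlyMany (ROX) | The volume can be mounted read-only by many nodes. | Suited to content distribution; good for sharing read-only data; supported by many storage systems |
| ReadWriteMany (RWX) | The volume can be mounted read-write by many nodes. | Suited to multi-replica read-write access; demanding on the storage system; supported by few storage systems |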
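Why a mismatched PV leaves the PVC Pending: with static provisioning, Kubernetes binds a PVC only to an Available PV that has the same `storageClassName`, access modes that include the requested ones, and a capacity at least as large as the request. The Python sketch below is a simplified model of those checks, not the real binder: it ignores volumeMode, label selectors and node affinity, `parse_quantity`/`can_bind` are hypothetical helpers, and quantity parsing only handles the binary suffixes Ki/Mi/Gi/Ti used in this article.

```python
# Binary suffixes used in this article's manifests (e.g. "100Gi", "10Gi").
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '100Gi' to bytes (binary suffixes only)."""
    for suffix, factor in _UNITS.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)  # plain byte count


def can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified version of the static PV/PVC matching rules."""
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]
    # Only PVs that are not already bound are candidates
    if pv.get("status", {}).get("phase", "Available") != "Available":
        return False
    if pv_spec.get("storageClassName") != pvc_spec.get("storageClassName"):
        return False
    # Every requested access mode must be offered by the PV
    if not set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"]):
        return False
    capacity = parse_quantity(pv_spec["capacity"]["storage"])
    request = parse_quantity(pvc_spec["resources"]["requests"]["storage"])
    return capacity >= request
```

With a 100Gi request, a `local-path` PV of 100Gi (or larger) with `ReadWriteOnce` matches, while a 50Gi PV or a PV with a different storage class does not, which is the Pending situation described above.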

```shell
kubectl apply -f pv.yaml
kubectl get pv
```

```
NAME               CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                             STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
local-pv-node189   100Gi      RWO            Delete           Bound       default/ollama-models-store-pvc   local-path     <unset>                          2d15h
local-pv-node191   100Gi      RWO            Delete           Available                                     local-path     <unset>                          2d15h
```

```shell
kubectl get pvc
```

```
NAME                      STATUS   VOLUME             CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
ollama-models-store-pvc   Bound    local-pv-node189   100Gi      RWO            local-path     <unset>                 2d15h
```

pv.yaml:

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-node189
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-path
  hostPath:
    path: /data/      # local storage path (type: Directory requires it to already exist)
    type: Directory
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s130-node189   # replace with your node name
```

Starting Deepseek-r1 1.5b

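```shell
kubectl apply -f deepseek-r1-1.5b.yaml
```

The image address defined in deepseek-r1-1.5b.yaml may not take effect, so the generated workloads can still try to pull `ollama/ollama` from the public registry. In that case, do the following: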

1. Edit statefulset/ollama-models-store:

```shell
kubectl edit statefulset/ollama-models-store
```

(`kubectl describe po/ollama-models-store-0` shows which image the Pod is currently using.) Replace:

```yaml
image: ollama/ollama
imagePullPolicy: Always
```

with:

```yaml
image: 192.168.xxx.101/library/ollama:latest
imagePullPolicy: IfNotPresent
```

2. Make the same change in deploy/ollama-model-deepseek-r1:

```shell
kubectl edit deploy ollama-model-deepseek-r1
```

Then delete the old ReplicaSet (the older of the two, created before the edit):

```shell
kubectl get replicaset
```

```
NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/ollama-model-deepseek-r1-644575b7bf   1         1         0       2m55s
replicaset.apps/ollama-model-deepseek-r1-74866c698c   1         1         0       6m15s
```

```shell
kubectl delete replicaset.apps/ollama-model-deepseek-r1-74866c698c
```
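The interactive edits above can also be expressed as a non-interactive patch. The sketch below builds a strategic merge patch that swaps `ollama/ollama` for the private-registry image in every matching container and init container; strategic merge patches merge containers by their `name` field, so only name, image and pull policy need to be sent. It assumes the workload JSON comes from `kubectl get statefulset/ollama-models-store -o json` (or the Deployment); `build_image_patch` is a hypothetical helper, and `192.168.xxx.101` is the article's placeholder registry address.

```python
# Placeholder registry address as written in the article; substitute your own.
PRIVATE_IMAGE = "192.168.xxx.101/library/ollama:latest"


def _matches(image: str, old: str) -> bool:
    # Accept the bare name as well as tagged forms such as "ollama/ollama:<tag>"
    return image == old or image.startswith(old + ":")


def build_image_patch(workload: dict, old: str = "ollama/ollama",
                      new: str = PRIVATE_IMAGE,
                      pull_policy: str = "IfNotPresent") -> dict:
    """Build a strategic merge patch that swaps matching container images.

    containers and initContainers are merged by `name`, so each patch entry
    only carries name, image and imagePullPolicy.
    """
    pod_spec = workload["spec"]["template"]["spec"]
    patch_spec = {}
    for key in ("initContainers", "containers"):
        entries = [
            {"name": c["name"], "image": new, "imagePullPolicy": pull_policy}
            for c in pod_spec.get(key, [])
            if _matches(c["image"], old)
        ]
        if entries:
            patch_spec[key] = entries
    return {"spec": {"template": {"spec": patch_spec}}}
```

Serialize the result with `json.dumps` and pass it to `kubectl patch statefulset/ollama-models-store -p '<patch JSON>'` (strategic merge is the default patch type for built-in workloads). Whether the operator later reconciles the workload back is not covered by this sketch.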

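Running `kubectl apply -f deepseek-r1-1.5b.yaml` roughly triggers the following sequence:

1. statefulset/ollama-models-store is created first and pod/ollama-models-store-0 is scheduled and started; service/ollama-models-store is created at the same time. pod/ollama-models-store-0 runs the `/bin/ollama serve` service.
2. Once pod/ollama-models-store-0 is ready, deployment.apps/ollama-model-deepseek-r1 is created and its Pod is scheduled. The Pod's initContainers first pull the model by running `apt update && apt install curl -y && ollama pull deepseek-r1 && curl http://ollama-models-store:11434/api/generate -d '{"model": "%!s(MISSING)"}'deepseek-r1:1.5b` (reproduced as observed; `%!s(MISSING)` is how Go's `fmt` package renders a `%s` verb that has no matching argument).
3. After the initContainers succeed, pod/ollama-model-deepseek-r1-644575b7bf-sstjd starts and service/ollama-model-deepseek-r1 is created.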
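The `curl` at the end of the init command posts only a model name to Ollama's `/api/generate` endpoint; according to the Ollama API documentation, a generate request without a prompt simply loads the model into memory. A minimal standard-library Python equivalent of that warm-up call is sketched below. The base URL is the in-cluster service name from the article, `build_warmup_request`/`warm_up` are hypothetical helpers, and `"stream": False` is an added assumption so that a single JSON object comes back.

```python
import json
import urllib.request


def build_warmup_request(base_url: str, model: str) -> urllib.request.Request:
    """POST {"model": ...} with no prompt to /api/generate (loads the model)."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def warm_up(base_url: str = "http://ollama-models-store:11434",
            model: str = "deepseek-r1:1.5b", timeout: float = 600.0) -> dict:
    """Send the warm-up request; the service name only resolves inside the cluster."""
    with urllib.request.urlopen(build_warmup_request(base_url, model),
                                timeout=timeout) as resp:
        return json.loads(resp.read())
```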

```shell
kubectl get all
```

```
NAME                                            READY   STATUS    RESTARTS   AGE
pod/ollama-model-deepseek-r1-644575b7bf-sstjd   1/1     Running   0          2d15h
pod/ollama-models-store-0                       1/1     Running   0          2d15h

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)     AGE
service/kubernetes                 ClusterIP   10.254.0.1       <none>        443/TCP     191d
service/ollama-model-deepseek-r1   ClusterIP   10.254.223.236   <none>        11434/TCP   2d15h
service/ollama-models-store        ClusterIP   10.254.182.20    <none>        11434/TCP   2d15h

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ollama-model-deepseek-r1   1/1     1            1           2d15h

NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/ollama-model-deepseek-r1-644575b7bf   1         1         1       2d15h

NAME                                   READY   AGE
statefulset.apps/ollama-models-store   1/1     2d15h
```

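Cleaning up the model: `kubectl delete -f deepseek-r1-1.5b.yaml` alone is not enough; the StatefulSet and PVC of the store service must be deleted as well:

```shell
kubectl delete -f deepseek-r1-1.5b.yaml
kubectl delete StatefulSet/ollama-models-store
kubectl delete pvc/ollama-models-store-pvc
```

deepseek-r1-1.5b.yaml: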

```yaml
apiVersion: ollama.ayaka.io/v1
kind: Model
metadata:
  name: deepseek-r1
spec:
  replicas: 1
  image: deepseek-r1:1.5b
  imagePullPolicy: IfNotPresent
  storageClassName: local-path
  persistentVolume:
    accessMode: ReadWriteOnce
  podTemplate:
    spec:
      initContainers:
        - args:
          name: ollama-image-pull
          image: 192.168.xxx.101/library/ollama:latest
          imagePullPolicy: IfNotPresent
      containers:
        - args:
          name: server
          image: 192.168.xxx.101/library/ollama:latest
          imagePullPolicy: IfNotPresent
```

Calling Deepseek-r1 1.5b

```shell
curl http://10.254.56.37:11434/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "deepseek-r1:latest",
  "messages": [
    {
      "role": "user",
      "content": "Hello! 比较3.11和3.3的大小?"
    }
  ]
}'
```

Replace `10.254.56.37` with the ClusterIP of service/ollama-model-deepseek-r1 in your cluster. The prompt asks the model to compare 3.11 and 3.3; the response is:

```json
{
  "id": "chatcmpl-430",
  "object": "chat.completion",
  "created": 1745992155,
  "model": "deepseek-r1:latest",
  "system_fingerprint": "fp_ollama",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "\u003cthink\u003e\n首先,比较两个小数的整数部分。两个数都为3,说明整数部分相同。\n\n接着,比较十分位。第一个数是0.11,第二个数是0.3。明显0.1小于0.3。\n\n因此,可以得出3.11小于3.3。\n\u003c/think\u003e\n\n**解答:**\n\n我们来比较一下 \\(3{.}11\\) 和 \\(3{.}3\\) 的大小。\n\n1. **比较整数部分:**\n \\[\n 3 \\quad (3{.}11) \\quad \\text{和} \\quad 3 \\quad (3{.}3)\n \\]\n 整数部分相同,都为3。\n\n2. **比较十分位:**\n - \\(3{.}11\\) 的十分位是\\(1\\)\n - \\(3{.}3\\) 的十分位是\\(3\\)\n\n 这里,\\(1 \u003c 3\\),因此:\n \\[\n 3{.}11 \u003c 3{.}3\n \\]\n\n**最终答案:**\n\\[\n\\boxed{3{.}11 \u003c 3{.}3}\n\\]"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 20, "completion_tokens": 262, "total_tokens": 282}
}
```
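The same request can be sent from code. The sketch below is a hypothetical standard-library Python client for the OpenAI-compatible `/v1/chat/completions` endpoint used by the `curl` example, plus a helper that separates DeepSeek-R1's `<think>` reasoning block from the final answer, as seen in the response. `BASE_URL` and `MODEL` are copied from the `curl` example and are assumptions to adjust for your cluster; `chat` and `split_reasoning` are illustrative names.

```python
import json
import re
import urllib.request

# Values copied from the curl example; adjust to your Service ClusterIP/model tag.
BASE_URL = "http://10.254.56.37:11434"
MODEL = "deepseek-r1:latest"

# Non-greedy match of the first <think> block, spanning newlines
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def chat(prompt: str, base_url: str = BASE_URL, model: str = MODEL,
         timeout: float = 300.0) -> dict:
    """Send one user message and return the decoded JSON response."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())


def split_reasoning(content: str) -> tuple[str, str]:
    """Split an R1 reply into (reasoning, answer); reasoning is '' if absent."""
    match = _THINK_RE.search(content)
    if match is None:
        return "", content.strip()
    reasoning = match.group(1).strip()
    answer = (content[: match.start()] + content[match.end():]).strip()
    return reasoning, answer
```

Usage: `reasoning, answer = split_reasoning(chat("Compare 3.11 and 3.3")["choices"][0]["message"]["content"])`. Note that `tuple[str, str]` needs Python 3.9 or later.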

Integrating Open WebUI on Kubernetes

Create the PV and PVC required by Open WebUI

```shell
kubectl apply -f pv4openwebui.yaml
kubectl apply -f pvc4openwebui.yaml
```

pv4openwebui.yaml:

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: open-webui-node191
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-path
  hostPath:
    path: /data/open_webui   # local storage path (type: Directory requires it to already exist)
    type: Directory
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s130-node191   # replace with your node name
```

pvc4openwebui.yaml:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: open-webui-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```

Starting Open WebUI

```shell
kubectl apply -f open-webui-deploy.yaml
```

open-webui-deploy.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: open-webui
spec:
  replicas: 1
  selector:
    matchLabels:
      app: open-webui
  template:
    metadata:
      labels:
        app: open-webui
    spec:
      restartPolicy: Always
      containers:
        - name: myweb
          image: 192.168.XXX.101/library/open-webui:main
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
          env:
            - name: OLLAMA_BASE_URL
              value: 'http://ollama-model-deepseek-r1:11434'
          volumeMounts:
            - name: open-webui-data
              mountPath: /app/backend/data
      volumes:
        - name: open-webui-data
          persistentVolumeClaim:
            claimName: open-webui-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: open-webui-service
spec:
  type: NodePort
  ports:
    - protocol: TCP
      port: 8080
      nodePort: 31000
  selector:
    app: open-webui
```

Using Deepseek

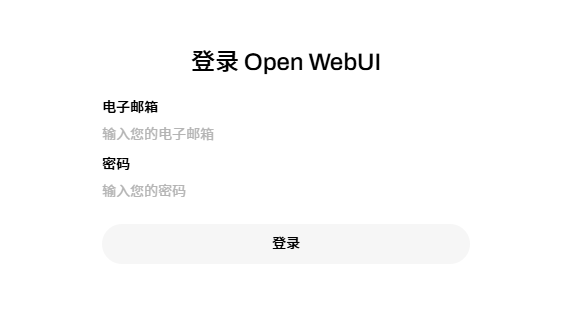

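Open http://node-ip:31000 in a browser and register an account as prompted.

The account is used to log in to the WebUI; after logging in, you can use the local Deepseek model.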
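If no model shows up in Open WebUI, a first check is whether the `OLLAMA_BASE_URL` configured in open-webui-deploy.yaml answers. Ollama's `GET /api/tags` endpoint lists the models available locally; the Python sketch below queries it and is meant to be run from a Pod inside the cluster, since the service name only resolves there. `model_names` and `list_models` are hypothetical helpers.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_models(base_url: str = "http://ollama-model-deepseek-r1:11434",
                timeout: float = 10.0) -> list[str]:
    """Query GET /api/tags on the Ollama service used as OLLAMA_BASE_URL."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.loads(resp.read()))
```

An empty list means the Ollama server is reachable but has no model pulled; a connection error points at the Service name, port or network policy instead.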
